How Facebook Influences Our Political Beliefs — And How to Beat the Algorithm

Since Mark Zuckerberg founded it in 2004, Facebook has become an increasingly important part of millennials’ lives. Facebook is a platform where people share photos, random thoughts, interesting articles, and, as plenty of us saw during the 2016 presidential election, a ton of political thoughts.

What we share on Facebook, including our political beliefs, doesn’t just get shared with the friends we’re connected with on the site, though. Facebook keeps very close tabs on everyone’s interests, which it turns into data that the site then uses to curate what we see, from posts by friends and family to targeted political ads. You probably already knew this. But it goes way deeper than you may have thought.

How Your Feed Gets Made

Facebook curates the feeds of its users based on what they like and are interested in, which means advertisers can get more bang for their buck. Facebook’s clever algorithm puts advertisers’ ads in front of people who are more likely to be attracted to what they’re promoting, whether that’s a mayoral campaign or a sale on sweaters.

But Facebook’s use of algorithms has also led to the creation of online “political bubbles” or “echo chambers,” where people primarily see content that already aligns with their interests or beliefs. This isn’t necessarily a problem in and of itself; after all, who wants to see a bunch of stuff they just don’t care about? But as we’ve learned from the major Russian misinformation scandal that involved Facebook, Twitter, and Google, being stuck in a bubble can lead to users seeing a lot of highly biased and sometimes false political posts.

So how does Facebook decide what a person sees when they log in? You may recall that Facebook used to show posts in chronological order, but that fell by the wayside long ago. Now, the infamous algorithm decides what Facebook users do and don’t see. And, as we mentioned earlier, the algorithm Facebook uses to determine which content goes on a person’s newsfeed is based on the collection of a lot of data.

According to How-To Geek, there are “thousands” of factors that go into determining what goes into a newsfeed. These include: who you interact with the most and what they’re interested in, the type of content you tend to interact with (for example, articles versus videos), what you like and share, and the interests you list in the “about” section of your own profile. Facebook can also track your web browsing habits to collect data for its own use.
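To make that a little more concrete, here’s a toy sketch of how a feed could be ranked by interest instead of by time. To be clear, Facebook’s real algorithm isn’t public, so everything here is an assumption made up for illustration: the `Post` fields, the `USER` signals, the `score` and `rank_feed` functions, and especially the weights. It only uses three of the factors mentioned above (who you interact with, what type of content you engage with, and what you say you’re interested in).

```python
from dataclasses import dataclass


@dataclass
class Post:
    author: str
    kind: str   # e.g. "article" or "video"
    topic: str


# Hypothetical user signals, loosely matching the factors listed above.
# Every name and number here is invented for illustration.
USER = {
    "interactions": {"alice": 30, "bob": 2},          # how often you interact with each friend
    "kind_affinity": {"video": 0.8, "article": 0.3},  # content types you tend to engage with
    "interests": {"politics", "cooking"},             # likes, shares, and "about" interests
}


def score(post: Post, user: dict) -> float:
    """Toy relevance score: a weighted sum of three signals (weights are arbitrary)."""
    total = sum(user["interactions"].values()) or 1
    friend = user["interactions"].get(post.author, 0) / total
    kind = user["kind_affinity"].get(post.kind, 0.0)
    topic = 1.0 if post.topic in user["interests"] else 0.0
    return 0.5 * friend + 0.2 * kind + 0.3 * topic


def rank_feed(posts, user):
    """Order posts by score, highest first, instead of chronologically."""
    return sorted(posts, key=lambda p: score(p, user), reverse=True)


posts = [
    Post("bob", "article", "sports"),
    Post("alice", "video", "politics"),
    Post("carol", "article", "cooking"),
]
feed = rank_feed(posts, USER)
print([p.author for p in feed])  # ['alice', 'carol', 'bob']
```

Notice that Bob’s sports article sinks to the bottom even though it was first in the list: this made-up user rarely interacts with Bob and hasn’t shown any interest in sports. That’s the bubble effect in miniature.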

Is There a Way to Know What Facebook Wants to Show You?

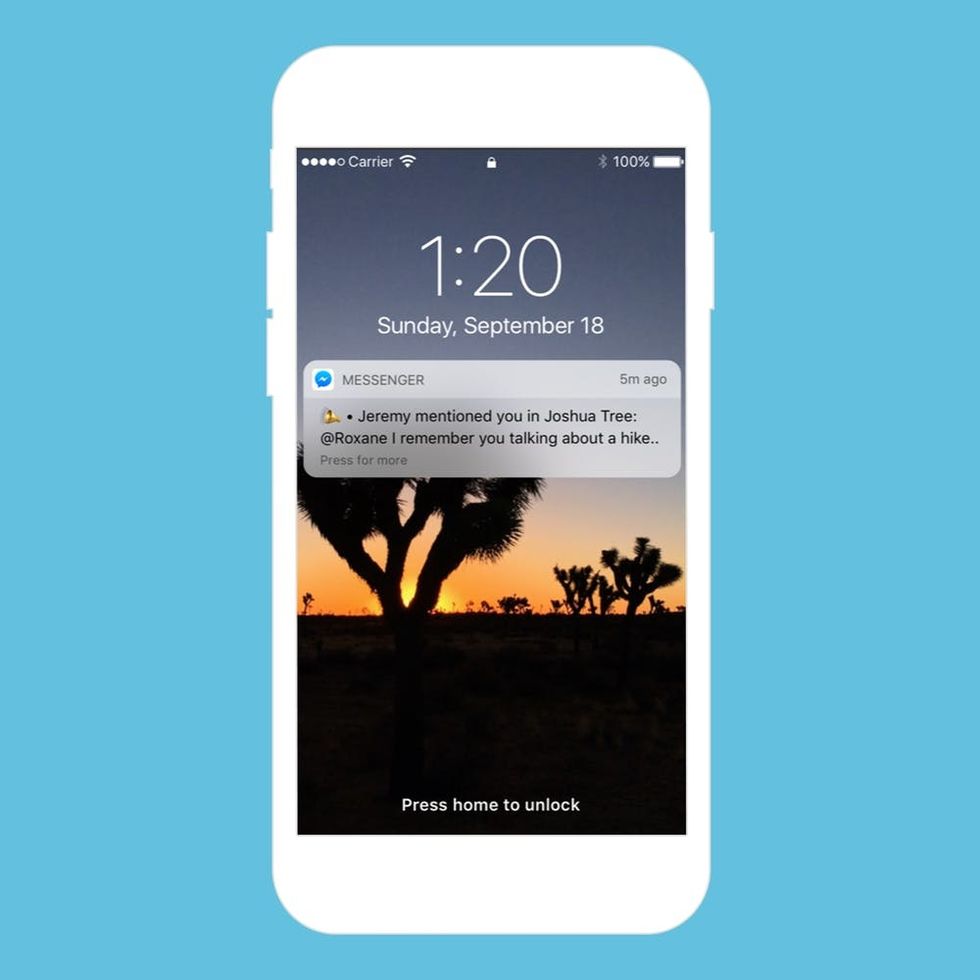

There IS a way to see what Facebook thinks you’re interested in, which can help you anticipate the types of ads Facebook will send your way. To check this out for yourself, log into Facebook, and head to facebook.com/adpreferences. There you’ll see several different categories of interests that Facebook uses to determine which ads you see, from “News and entertainment” to “Food and drink.” Weirdly, Facebook nestles your political preferences under a category labeled “Lifestyle and culture.” You won’t see this category on the main menu of this page, so click on “More” to find it. You have the option to remove different interests from your ad preferences on this page.

Ad preferences are part of the problem, but there’s more: According to a 2015 study published in the journal Proceedings of the National Academy of Sciences of the United States of America, if a Facebook user regularly interacts with, or expresses an interest in, conspiracy theories, the algorithm is just going to keep showing them more misinformation. In short: fake news.

But the algorithm works the same way for run-of-the-mill liberal and conservative content too. Whatever it is Facebook “thinks” you’re interested in, that’s what Facebook will show you. Some experts have argued that the “Facebook bubble” is why Donald Trump’s presidential victory was such a shock to so many people on the left: They simply had no idea how popular Trump actually was because they weren’t seeing information about him or his supporters on social media.

Political interests determined by Facebook also informed whether Facebook users saw Russian-created misinformation posts during the 2016 presidential election season. Kremlin-funded misinformation was targeted at users based on certain interests, such as particular publications and political issues. TechCrunch reports that people on both the left and the right were targeted based on their perceived political persuasions.

How to See What People Are Saying Outside of Your “Bubble”

Juana Summers, a political editor for CNN, told KQED last year that there are some simple steps social media users can take to make sure they know what’s going on outside their own bubble. The point isn’t to adopt harmful beliefs, or even to understand them, but simply to know what’s going on in the world. Summers recommends reading at least one article a week that you “violently disagree with,” following experts on social media (people you can trust not to spread false information), fact-checking before sharing posts (the website Snopes is great for this purpose), and doing research before committing to an opinion on something.

As for ads on Facebook, you can somewhat limit the ad tracking Facebook is capable of by going to www.facebook.com/ads/preferences, clicking on “Ad settings,” and changing the settings from “yes” to “no.”

There’s a lot of info rolling around on Facebook, and not all of it is good or helpful. Pretty much everything we do on Facebook (and even while just browsing the internet) is used by the platform to determine which information we get to see in our newsfeeds. Since Facebook probably won’t ever give up on its algorithm, it’s on us users to make sure the information we’re sharing is accurate, and to go the extra mile to check out what’s going on outside our own bubbles. It might even help us feel less divided.

(Images via Getty)